How I Made an AI Agent Modify My Website Through Telegram Messages

This article presupposes you have:

- Basic knowledge of AI and Large Language Models (LLMs)

- Familiarity with AI agents and automated workflows

While this article explores the core concept of using AI to automate code changes through chat messages, it focuses on the high-level approach rather than specific implementation details. The actual implementation can vary based on:

- Your specific requirements

- Technology stack preferences

- Existing infrastructure

Consider this an exploration of possibilities rather than a step-by-step tutorial.

Friday Night, May 30th, 2025

I was lying in bed, trying to fall asleep, browsing the new shadcn/ui website on my phone. I noticed their sleek transparent full-width navigation bar and thought it would look great on my website. Since I was already comfortable in bed, it felt too late to get up and make the change.

I thought to myself:

"I wish I could just send a message to an AI agent and have it make the change for me."

That simple thought wouldn't leave my head. And honestly, it was too fun to ignore.

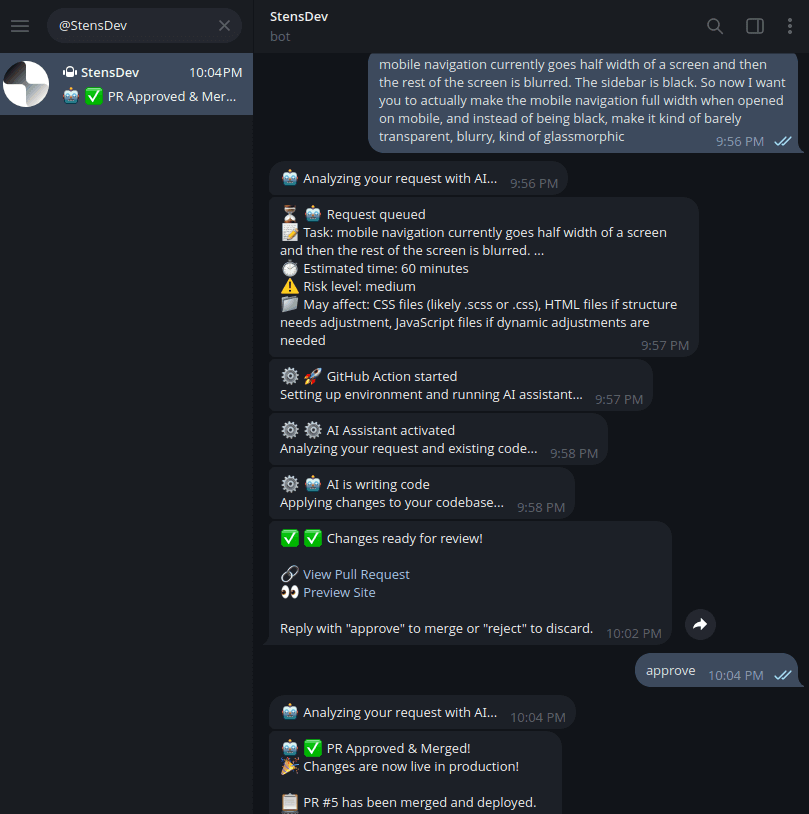

The next screenshot shows my complete conversation with the bot.

The complete conversation: from request to deployment

Saturday Morning, May 31st, 2025

I woke up early with that idea still bouncing around in my head. Instead of doing any proper research on existing solutions or carefully planning out the architecture, I just dove in. I had a simple goal: I wanted to text something like "make the navigation transparent" and have an AI agent actually implement it. Everything else would figure itself out along the way.

So, What Am I Trying to Build?

Here's the ideal workflow I had in mind: you text a Telegram bot saying "Restyle the mobile navigation by making it transparent" and it will:

- Understand your request using AI

- Automatically write the code using AI

- Create a pull request on GitHub

- Deploy a preview version of your site

- Let you approve or reject the changes

- Deploy to production if approved

Simple in concept, but as I quickly learned, there were quite a few moving pieces to orchestrate.

Step 1: Creating the Bot That Would Listen

I started with the most obvious entry point - I needed a way to send messages to an AI. After a quick search, Telegram seemed like the path of least resistance.

Why Telegram, you might ask?

I had never actually used Telegram before, but I had heard that they had minimal requirements for developers to get started with their API. This was unlike WhatsApp, which requires a business account and has many other prerequisites for creating a bot.

Creating a new Telegram bot took less than 5 minutes. You simply open the app, message @BotFather, and send the /newbot command. Follow the prompts to choose a name and username, and you're good to go. Once done, BotFather gives you a token that your code uses to authenticate as your bot.

Save the bot token. It will look something like 1234567890:ABCdefGHIjklMNOpqrsTUVwxyz. Treat it like a password, because anyone who has it can control the bot.

So now I had a bot that could receive my messages. But that was just the beginning - I needed somewhere for the AI processing to actually happen. The bot was essentially just a messenger; the real magic would need to happen elsewhere.
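Telegram can deliver messages to a bot in two ways. The bot can poll with getUpdates, or Telegram can push each update to a webhook URL. A serverless Worker fits the webhook model. Below is a minimal sketch of registering that webhook once the Worker from the next step is deployed. It assumes a hypothetical URL such as https://my-bot.example.workers.dev/telegram. The Bot API's setWebhook method takes the URL plus an optional secret_token, and Telegram then sends that value back in the X-Telegram-Bot-Api-Secret-Token header of every update.

```javascript
// Sketch: build the Bot API request that registers a webhook for the bot.
// The webhook URL and secret passed in are hypothetical placeholders.
function buildSetWebhookRequest(botToken, webhookUrl, secretToken) {
  // Bot API methods live at https://api.telegram.org/bot<token>/<method>
  const url = `https://api.telegram.org/bot${botToken}/setWebhook`;
  const body = {
    url: webhookUrl,
    // Telegram echoes this in the X-Telegram-Bot-Api-Secret-Token header
    secret_token: secretToken,
    // A text-driven bot only needs plain "message" updates
    allowed_updates: ["message"],
  };
  return {
    url,
    init: {
      method: "POST",
      headers: { "content-type": "application/json" },
      body: JSON.stringify(body),
    },
  };
}

// Usage, run once from a local Node 18+ script (fetch is global there):
// const { url, init } = buildSetWebhookRequest(process.env.BOT_TOKEN,
//   "https://my-bot.example.workers.dev/telegram", process.env.WEBHOOK_SECRET);
// const res = await fetch(url, init);
// console.log(await res.json()); // { ok: true, ... } on success
```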

Step 2: Figuring Out Where the AI Would Live

After creating the bot that would relay my messages to the AI, I needed to figure out where and how the AI processing would happen. This is where I had to make some architectural decisions.

I remembered seeing Cloudflare's new AI services in my X feed, and I thought this could be a perfect opportunity to test them out while building something useful. The timing felt right - they had recently announced Workers AI, and I was curious to see how it would perform in a real project.

So, I decided to base my architecture on Cloudflare Workers AI.

What are Cloudflare Workers?

Think of Cloudflare Workers as tiny pieces of code that run all over the world. Instead of having your code sit on a single server somewhere, Workers run on Cloudflare's network of data centers spread across more than 200 cities. When someone uses your app, their request gets handled by the Worker closest to them, making everything super responsive.

I picked Workers for this project because:

- It's quick to get started: You can have code running in literally seconds

- AI built-in: They have AI models ready to go through Workers AI

- Good memory: Durable Objects help the bot remember conversations

- Task handling: Built-in queues for when things take a while

- Plays nice with bots: Perfect for receiving messages from Telegram

And all of that can be managed through their Wrangler CLI. Deploying code is as simple as running wrangler deploy.
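Here is a minimal sketch of what "plays nice with bots" means in practice. Telegram POSTs each update to the webhook URL as JSON, and the Worker's fetch handler parses it and answers quickly. The sketch assumes only text messages matter. The /telegram path and the handleText placeholder are hypothetical. In a real Worker, deployed with wrangler deploy, the object would be the module's default export.

```javascript
// Sketch of a Worker entry point for Telegram webhook updates (module syntax).
// In a deployed Worker this object would be the file's `export default`.
const handled = []; // demo-only record of processed messages

// Hypothetical placeholder: the real bot would classify intent and queue work
async function handleText(env, chatId, text) {
  handled.push({ chatId, text });
}

const worker = {
  async fetch(request, env, ctx) {
    const { pathname } = new URL(request.url);
    if (request.method !== "POST" || pathname !== "/telegram") {
      return new Response("Not found", { status: 404 });
    }
    // A Telegram Update looks like { update_id, message: { chat: { id }, text } }
    const update = await request.json();
    const message = update.message;
    if (!message || typeof message.text !== "string") {
      // Stickers, photos, edited messages etc. are ignored; any 2xx reply
      // tells Telegram the update was delivered so it is not resent.
      return new Response("ignored");
    }
    await handleText(env, message.chat.id, message.text);
    return new Response("ok");
  },
};
```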

Key Insight: The serverless approach with Cloudflare Workers means no server management, automatic scaling, and global distribution. That's perfect for a side project that might get zero traffic one day and thousands of requests the next! Just keep an eye on pricing: not all of it is free.

But there was still a problem I needed to solve: how would the bot remember our conversation? I didn't want to start from scratch every time I sent a message.

Step 3: Giving the Bot Memory

What are Durable Objects?

A Durable Object is a special kind of Cloudflare Worker that combines compute with storage, making previously stateless Workers stateful.

They're perfect for this project because they allow the bot to remember conversations and maintain consistent state across distributed requests. So when I send "approve" after reviewing changes, the bot knows exactly what I'm approving.

More info: Durable Objects
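One way to model this is one Durable Object per chat, addressed by chat ID, that stores the pull request waiting for a decision. This is a sketch under assumptions. The class name ChatSession, the /pending route, the pendingPr key and the CHAT_SESSIONS binding are hypothetical. state.storage.get/put/delete and namespace.idFromName are the real Durable Objects APIs. In a real Worker the class is exported and bound in the Wrangler configuration.

```javascript
// Sketch of a Durable Object that remembers the pending PR for one chat.
// Class name, route and storage key are hypothetical.
class ChatSession {
  constructor(state, env) {
    this.state = state; // state.storage persists across requests and restarts
    this.env = env;
  }

  async fetch(request) {
    const { pathname } = new URL(request.url);
    if (pathname !== "/pending") return new Response("Not found", { status: 404 });

    if (request.method === "PUT") {
      // Remember which PR is awaiting "approve" / "reject"
      const pending = await request.json(); // e.g. { prNumber, previewUrl }
      await this.state.storage.put("pendingPr", pending);
      return new Response("saved");
    }
    if (request.method === "DELETE") {
      await this.state.storage.delete("pendingPr");
      return new Response("cleared");
    }
    // GET: return the pending PR, or null if nothing is waiting
    const pending = await this.state.storage.get("pendingPr");
    return new Response(JSON.stringify(pending ?? null), {
      headers: { "content-type": "application/json" },
    });
  }
}

// From the Worker, every message of a chat is routed to the same object:
// const stub = env.CHAT_SESSIONS.get(env.CHAT_SESSIONS.idFromName(String(chatId)));
// const pending = await (await stub.fetch("https://session/pending")).json();
```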

With the infrastructure sorted out, I could finally get to the exciting part - making the bot actually intelligent.

Step 4: Making the Bot Intelligent with AI

This is where things got really interesting. Instead of building a basic command bot that only responds to exact keywords, I wanted something that could actually understand what I meant. Cloudflare Workers AI gives you access to over 50 different AI models, which meant I could experiment with different approaches.

The first challenge was teaching the bot to understand intent. When I send a message, is it a code change request? Am I approving something? Or am I just asking a question about the codebase?

Teaching the Bot to Understand Intent

My next task was making the bot understand the difference between "Restyle the mobile navigation by making it transparent," "approve," and "What files handle authentication?". I used Llama 3.1 for this:

```javascript
const intentAnalysis = await env.AI.run('@cf/meta/llama-3.1-8b-instruct-fast', {
  messages: [
    {
      role: 'system',
      content: 'Classify this message as: code_change, approve, reject, question, or other'
    },
    {
      role: 'user',
      content: userMessage // the text of the incoming Telegram message
    }
  ]
});
// Text-generation models on Workers AI return their answer in intentAnalysis.response
```

Once the bot could understand what I wanted, I realized there was another step I could add that would make the whole experience feel more professional: complexity analysis.

Analyzing Code Complexity Before Writing Any Code

Before moving on to the actual codebase editing, the AI Worker analyzes the request to estimate complexity and time. I used Qwen 2.5 Coder (a specialized coding model) for this:

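```javascript
const codeAnalysis = await env.AI.run('@cf/qwen/qwen2.5-coder-32b-instruct', {
  messages: [
    {
      role: 'user',
      content: `Analyze this request: ${request}` // request = the user's change request
    }
  ]
});
// The model's analysis text is in codeAnalysis.response
```

This gives me estimates like: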

- Complexity: simple/medium/complex

- Time estimate: 3-15 minutes

- Risk level: low/medium/high

- Affected files: Which parts of the codebase might change

All of this happens before any actual code generation.
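The prompt shown above doesn't specify an output format, so the four fields only come back reliably if the prompt asks for them in a fixed shape. Here is a minimal sketch that assumes the prompt requests a JSON object with the hypothetical keys complexity, minutes, risk and files. It takes the span from the first { to the last } in the reply, parses it, and keeps conservative defaults for anything missing or malformed. This makes the estimates reliable to display, not more accurate.

```javascript
// Sketch: turn the analysis reply into a structured estimate.
// Assumes the prompt asked for JSON such as
// {"complexity":"simple","minutes":5,"risk":"low","files":["components/nav.tsx"]}
// (key names are hypothetical).
const ALLOWED = {
  complexity: ["simple", "medium", "complex"],
  risk: ["low", "medium", "high"],
};

function parseEstimate(reply) {
  // Conservative defaults for anything missing or malformed
  const estimate = { complexity: "medium", minutes: null, risk: "medium", files: [] };
  const text = String(reply ?? "");
  // Models often wrap JSON in prose or code fences: take first "{" to last "}"
  const start = text.indexOf("{");
  const end = text.lastIndexOf("}");
  if (start === -1 || end <= start) return estimate;

  let data;
  try {
    data = JSON.parse(text.slice(start, end + 1));
  } catch {
    return estimate; // not valid JSON: keep the defaults
  }

  for (const key of ["complexity", "risk"]) {
    if (ALLOWED[key].includes(data[key])) estimate[key] = data[key];
  }
  if (Number.isFinite(data.minutes) && data.minutes > 0) estimate.minutes = data.minutes;
  if (Array.isArray(data.files)) {
    estimate.files = data.files.filter((file) => typeof file === "string");
  }
  return estimate;
}

// Usage (hypothetical): const estimate = parseEstimate(codeAnalysis.response);
```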

For now, these initial estimates are more of a "guess-timate", a bit of fun flair in the current setup! The main reason is that, much like a human developer without context, the AI model performing this first-pass analysis on the edge has no access to the specifics of the codebase, so it can't make an educated guess.

However, this is an interesting area for possible future refinement. We could potentially improve these estimates by tweaking the workflow. For instance:

- Two-Stage Analysis: The initial edge analysis could trigger a lightweight process (perhaps a separate, quick GitHub Action or a more sophisticated edge function with limited codebase access) to fetch some high-level context about potentially relevant code sections. This context could then feed into a refined complexity analysis before the main code generation kicks off.

- Vector Embeddings: For a more advanced setup, one could imagine maintaining vector embeddings of the codebase that the edge worker could query. This would allow the initial AI analysis to retrieve semantically similar code snippets, providing valuable context for a more accurate upfront assessment.

- Agentic Retrieval: We could use traditional search techniques in real time, exposed to the LLM as tool calls, instead of precomputed embeddings.
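The retrieval step behind the vector-embeddings idea is a nearest-neighbour search. Here is a toy in-memory sketch that scores each code chunk by cosine similarity to the query vector and keeps the best k. The three-dimensional vectors and file paths are made up. A real setup would get vectors from an embedding model and keep them in a vector store such as Cloudflare Vectorize.

```javascript
// Toy sketch of embedding retrieval: rank code chunks by cosine similarity
// to a query vector and keep the top k. Vectors and paths are made up.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // similarity undefined for zero vectors
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

function topK(queryVector, chunks, k) {
  return chunks
    .map((chunk) => ({ ...chunk, score: cosineSimilarity(queryVector, chunk.vector) }))
    .sort((x, y) => y.score - x.score) // highest similarity first
    .slice(0, k);
}

const chunks = [
  { path: "components/navbar.tsx", vector: [0.9, 0.1, 0.0] },
  { path: "lib/auth.ts", vector: [0.0, 0.2, 0.9] },
  { path: "styles/globals.css", vector: [0.6, 0.6, 0.1] },
];

// A request like "make the navigation transparent" would be embedded by a
// model; here its vector is simply assumed.
const hits = topK([1, 0.2, 0], chunks, 2);
```

With real embeddings, the top-ranked paths (and their contents) would be handed to the complexity prompt as extra context.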

For now, the detailed codebase interaction happens later, when the GitHub Action gives an LLM-assisted coding tool like Aider full access to make the actual code changes. But it's exciting to think about how these preliminary steps could become even smarter!

At this point, I had a bot that could understand my messages and analyze them. But I still had a fundamental problem: the Cloudflare Worker running on the edge couldn't access my private GitHub repository. I needed a way to bridge that gap.

Step 5: The Workflow - From Message to Merged Code

Here's where everything comes together, and where I had to solve the trickiest part of the whole system. When I send "Restyle the mobile navigation by making it transparent" to my bot, here's what actually happens behind the scenes:

1. Instant AI Analysis (Cloudflare Worker)

The message hits my Worker, which immediately:

- Classifies intent (is this a code change request?)

- Analyzes complexity

- Sends me a detailed response with estimates
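The first bullet hides a small parsing step. The classifier shown earlier returns free text in intentAnalysis.response. A model told to pick a label may still reply "Approve." or "This is a code_change request". Here is a minimal sketch that normalizes the reply into one of the five labels from the system prompt. It uses word-boundary matches so that, for example, "disapprove" is not read as "approve". The function and pattern names are hypothetical.

```javascript
// Sketch: map the classifier's free-text reply onto one of the labels from the
// system prompt. The pattern list and function name are hypothetical.
const INTENT_PATTERNS = [
  ["code_change", /\bcode[ _-]?change\b/], // also accepts "code change"
  ["approve", /\bapprove\b/],
  ["reject", /\breject\b/],
  ["question", /\bquestion\b/],
];

function parseIntent(modelReply) {
  const text = String(modelReply ?? "").toLowerCase();
  // First matching label wins; anything unrecognized falls back to "other"
  for (const [label, pattern] of INTENT_PATTERNS) {
    if (pattern.test(text)) return label;
  }
  return "other";
}

// Usage inside the Worker:
// const intent = parseIntent(intentAnalysis.response);
// if (intent === "code_change") { /* analyze complexity, then queue the job */ }
```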

So far, this all happens within a few seconds. But here's where I hit the first major architectural challenge.

2. The Handoff Problem and Queue-Based Processing

My Cloudflare Worker runs on the edge and can't access my private GitHub repository. But I didn't want to make the user wait while I figured out how to make the code changes. So instead of trying to do everything in one place, I split the workflow.

The Worker queues the request and responds immediately. Cloudflare Queues handle the async processing, and failed messages are retried rather than lost on the first crash. This was crucial - I wanted the bot to feel responsive even though the actual code changes might take a few minutes.
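Here is a minimal sketch of that split. It assumes a queue binding named CODE_CHANGES and a triggerWorkflow function that starts the GitHub Action, both hypothetical. The producer calls send() on the binding. Cloudflare then calls the Worker's queue() handler with a batch of messages. Each message is either acknowledged with ack() or handed back for redelivery with retry().

```javascript
// Producer: called from the fetch handler once the request has been analyzed.
// CODE_CHANGES is a hypothetical queue binding configured in Wrangler.
async function enqueueCodeChange(env, chatId, request, estimate) {
  await env.CODE_CHANGES.send({ chatId, request, estimate, queuedAt: Date.now() });
}

// Consumer: returns the queue() handler that sits next to fetch() on the
// Worker's default export. triggerWorkflow is injected so it can be swapped.
function makeQueueConsumer(triggerWorkflow) {
  return {
    async queue(batch, env, ctx) {
      for (const message of batch.messages) {
        try {
          await triggerWorkflow(env, message.body);
          message.ack(); // handled: do not deliver this message again
        } catch {
          message.retry(); // ask Queues to redeliver it later
        }
      }
    },
  };
}

// Wiring (hypothetical):
// export default { fetch: handleFetch, ...makeQueueConsumer(triggerWorkflow) };
```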

3. GitHub Actions Takes Over

This is the handoff point. My Worker can't access my codebase (it runs on the edge), so it triggers a GitHub Action with all the AI analysis as context:

```yaml
- name: Apply changes with Aider
  uses: mirrajabi/aider-github-action@v1.1.0
  with:
    model: gpt-4o-mini
    aider_args: --yes --message "${{ env.PROMPT }}"
```

This was actually the piece that made everything click. Aider is the actual AI coding assistant that can read files, understand context, and make changes. It runs in the secure GitHub environment with full access to the repository, solving my access problem elegantly.

Aider link: Aider
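The post doesn't say exactly how the Worker triggers the Action. One common option, sketched here as an assumption, is GitHub's repository_dispatch REST endpoint (POST /repos/{owner}/{repo}/dispatches). The Worker sends an event_type plus a client_payload. A workflow that declares `on: repository_dispatch` picks up the event and can read the payload as github.event.client_payload. The owner, repo, event name and payload keys are placeholders. The token needs write access to the repository's contents.

```javascript
// Sketch: build the GitHub REST call that fires a repository_dispatch event.
// Owner, repo, event type and payload keys are hypothetical placeholders.
function buildDispatchRequest({ owner, repo, token, chatId, prompt, estimate }) {
  return {
    url: `https://api.github.com/repos/${owner}/${repo}/dispatches`,
    init: {
      method: "POST",
      headers: {
        accept: "application/vnd.github+json",
        authorization: `Bearer ${token}`,
        "x-github-api-version": "2022-11-28",
        // GitHub rejects API requests that have no User-Agent header
        "user-agent": "telegram-code-bot",
        "content-type": "application/json",
      },
      body: JSON.stringify({
        event_type: "telegram-code-change",
        // Readable in the workflow as github.event.client_payload.*
        client_payload: { chat_id: chatId, prompt, estimate },
      }),
    },
  };
}

// Hypothetical trigger used by the queue consumer; env var names are made up.
async function triggerWorkflow(env, { chatId, prompt, estimate }) {
  const { url, init } = buildDispatchRequest({
    owner: env.GITHUB_OWNER,
    repo: env.GITHUB_REPO,
    token: env.GITHUB_TOKEN,
    chatId,
    prompt,
    estimate,
  });
  const res = await fetch(url, init);
  // A successful dispatch returns 204 No Content
  if (res.status !== 204) throw new Error(`dispatch failed: ${res.status}`);
}
```

On the workflow side, the PROMPT variable used by the Aider step could then be set from github.event.client_payload.prompt.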

4. The Magic: Automatic Pull Request

Once Aider makes the changes, the GitHub Action automatically:

- Creates a new branch

- Commits the AI-generated changes

- Opens a pull request

- Waits for Vercel to deploy a preview

This part felt like magic when I got it working. I'd send a message, and a few minutes later there'd be a fresh pull request with exactly the changes I asked for.
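The fourth bullet, waiting for Vercel, is a polling problem. The post doesn't say how the Action detects that the preview is live, so this is a generic sketch: poll an async check until it reports ready or a timeout passes. The previewIsUp check, which treats any 2xx response from the preview URL as ready, is an assumption. If previews sit behind Vercel's deployment protection, a plain request may not get a 2xx, and a deployment-status check would be needed instead.

```javascript
// Sketch: poll an async check until it returns a truthy value or time runs out.
// What "ready" means is up to the caller; nothing here is Vercel-specific.
async function waitUntil(check, { intervalMs = 10_000, timeoutMs = 10 * 60_000 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const result = await check();
    if (result) return result;
    if (Date.now() + intervalMs > deadline) {
      throw new Error(`not ready after ${timeoutMs} ms`);
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Hypothetical check: the preview counts as ready once its URL answers with 2xx.
function previewIsUp(previewUrl) {
  return async () => {
    try {
      const res = await fetch(previewUrl, { method: "HEAD" });
      return res.ok ? previewUrl : null;
    } catch {
      return null; // network errors just mean "not yet"
    }
  };
}

// Usage: const url = await waitUntil(previewIsUp(previewUrl), { intervalMs: 15_000 });
```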

5. Preview and Approval

Then the bot sends me a message with the preview link and a request to approve or reject the changes:

Changes ready for review! 🔗 View Pull Request 👀 Preview Site

Reply with "approve" to merge or "reject" to discard.

I can click the preview link, see the new transparent navigation working on my actual site, and if I like it, just text back "approve". The bot remembers the context from our conversation and knows exactly what to merge.
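The review step comes down to two HTTP calls. This is a sketch under assumptions. The Telegram Bot API sendMessage method delivers the review message. The GitHub REST endpoints PUT /repos/{owner}/{repo}/pulls/{pull_number}/merge and PATCH /repos/{owner}/{repo}/pulls/{pull_number} (with state "closed") act on the reply. The squash merge method and all owner, repo and token values are illustrative choices, not necessarily what the bot does. The PR number would come from the conversation state the bot keeps.

```javascript
// Sketch: the two API calls behind the review step. Values are hypothetical.

// 1. Tell the user the preview is ready (Telegram Bot API sendMessage).
function buildReviewMessage(botToken, chatId, prUrl, previewUrl) {
  return {
    url: `https://api.telegram.org/bot${botToken}/sendMessage`,
    init: {
      method: "POST",
      headers: { "content-type": "application/json" },
      body: JSON.stringify({
        chat_id: chatId,
        text:
          "Changes ready for review!\n" +
          `Pull request: ${prUrl}\nPreview: ${previewUrl}\n\n` +
          'Reply with "approve" to merge or "reject" to discard.',
      }),
    },
  };
}

// 2. Act on the reply: merge the PR on "approve", close it on "reject".
function buildDecisionRequest(decision, { owner, repo, prNumber, token }) {
  const base = `https://api.github.com/repos/${owner}/${repo}/pulls/${prNumber}`;
  const headers = {
    accept: "application/vnd.github+json",
    authorization: `Bearer ${token}`,
    "user-agent": "telegram-code-bot",
    "content-type": "application/json",
  };
  if (decision === "approve") {
    // "squash" is an assumption; GitHub also accepts "merge" and "rebase"
    const body = JSON.stringify({ merge_method: "squash" });
    return { url: `${base}/merge`, init: { method: "PUT", headers, body } };
  }
  if (decision === "reject") {
    // Closing (not deleting) keeps a record of the rejected attempt
    const body = JSON.stringify({ state: "closed" });
    return { url: base, init: { method: "PATCH", headers, body } };
  }
  throw new Error(`unknown decision: ${decision}`);
}
```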

The Before and After

And here's what the bot actually accomplished when I asked it to make the navigation transparent:

Original: Black navigation covering content

After AI changes: Transparent glassmorphic navigation

Does this look as good as I want it to? Absolutely not. It's an amateurish attempt at the design that inspired me to build this. I will probably redo it in the near future, but that is beside the point. The point of this experiment was the orchestration of the entire process.

If I had made a more detailed prompt, Aider would have been able to make the changes with more precision.

For now though, I'm leaving it as is - when you see the transparent mobile navigation bar, just know it's the end product of this fun experiment.

The Final Step: GitHub PR Confirmation

And just like that, the entire loop was complete:

GitHub automatically merges the AI-generated changes after approval

From "make the navigation transparent" to deployed code in production - all through text messages.

The production deployment happened automatically after the PR merge to main, since the site is hosted on Vercel with auto-deployment configured for the main branch. This completed the full circle from chat message to live website changes.

A Quick Note on Security

Given the power this AI agent has – direct access to modify the codebase and deploy changes – security is paramount. For this initial proof-of-concept, I implemented a basic security measure: restricting the Telegram bot to only accept commands from my specific chat ID.

However, beyond this, I haven't performed extensive security due diligence. Since the agent currently has a high degree of freedom and broad permissions, I'll be temporarily shutting down the live bot until I can implement more robust security protocols and safeguards. This is a critical step before considering any wider use or more complex integrations.
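The chat-ID restriction is a few lines in the webhook handler. Here is a minimal sketch that assumes the owner's chat ID and a webhook secret are stored as Worker secrets, under the hypothetical names ALLOWED_CHAT_ID and WEBHOOK_SECRET. The header check only works if the same secret was passed as secret_token when the webhook was registered with setWebhook. This only narrows who can talk to the bot. It does not limit what the agent can do once it is triggered.

```javascript
// Sketch: reject webhook calls that don't come from Telegram or from my chat.
// WEBHOOK_SECRET and ALLOWED_CHAT_ID are hypothetical secret names on env.
function timingSafeEqualStrings(a, b) {
  // Compare without exiting early on the first mismatch (length still leaks)
  if (typeof a !== "string" || typeof b !== "string" || a.length !== b.length) return false;
  let diff = 0;
  for (let i = 0; i < a.length; i++) diff |= a.charCodeAt(i) ^ b.charCodeAt(i);
  return diff === 0;
}

function isAuthorized(request, update, env) {
  // 1. Telegram sends the setWebhook secret_token in this header (null if absent)
  const secret = request.headers.get("x-telegram-bot-api-secret-token");
  if (!timingSafeEqualStrings(secret, env.WEBHOOK_SECRET)) return false;
  // 2. Only accept messages from the owner's chat
  const chatId = update?.message?.chat?.id;
  return chatId !== undefined && String(chatId) === String(env.ALLOWED_CHAT_ID);
}

// In the fetch handler, before doing anything else:
// if (!isAuthorized(request, update, env)) return new Response("ignored");
```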

So Anyway... For Everyone Who Stuck Around to the End of This Post... You Get a Treat: Chocolate! 🍫

With looming clouds of singularity above us, I've been hedging my bets in a more delicious way. When the AI singularity inevitably makes all of us software developers obsolete, I'll be ready with my backup career: Chocolatier 👨‍🍳.

This is my recent obsession: hand-crafted chocolate bonbons that no AI (yet) can replicate.

So here's to the future: may our AI agents handle the deployment pipelines while we focus on the finer things in life, like perfecting the art of chocolate making.